Abstract

We propose the new problem of retrieving audio files relevant to multimodal design documents, which combine textual elements and visual imagery (e.g., birthday or greeting cards). Beyond enhancing user experience, audio that matches the theme or style of these inputs also improves the accessibility of such documents (e.g., visually impaired people can listen to the audio instead). Recent work on audio retrieval exists, but its methods and datasets explicitly target natural images. Our problem instead considers multimodal design documents created by users in creative software, which differ substantially from natural photographs. To this end, our first contribution is a new large-scale dataset called Melodic-Design (or MELON), comprising design documents that span various styles, themes, templates, and illustrations, paired with music audio.

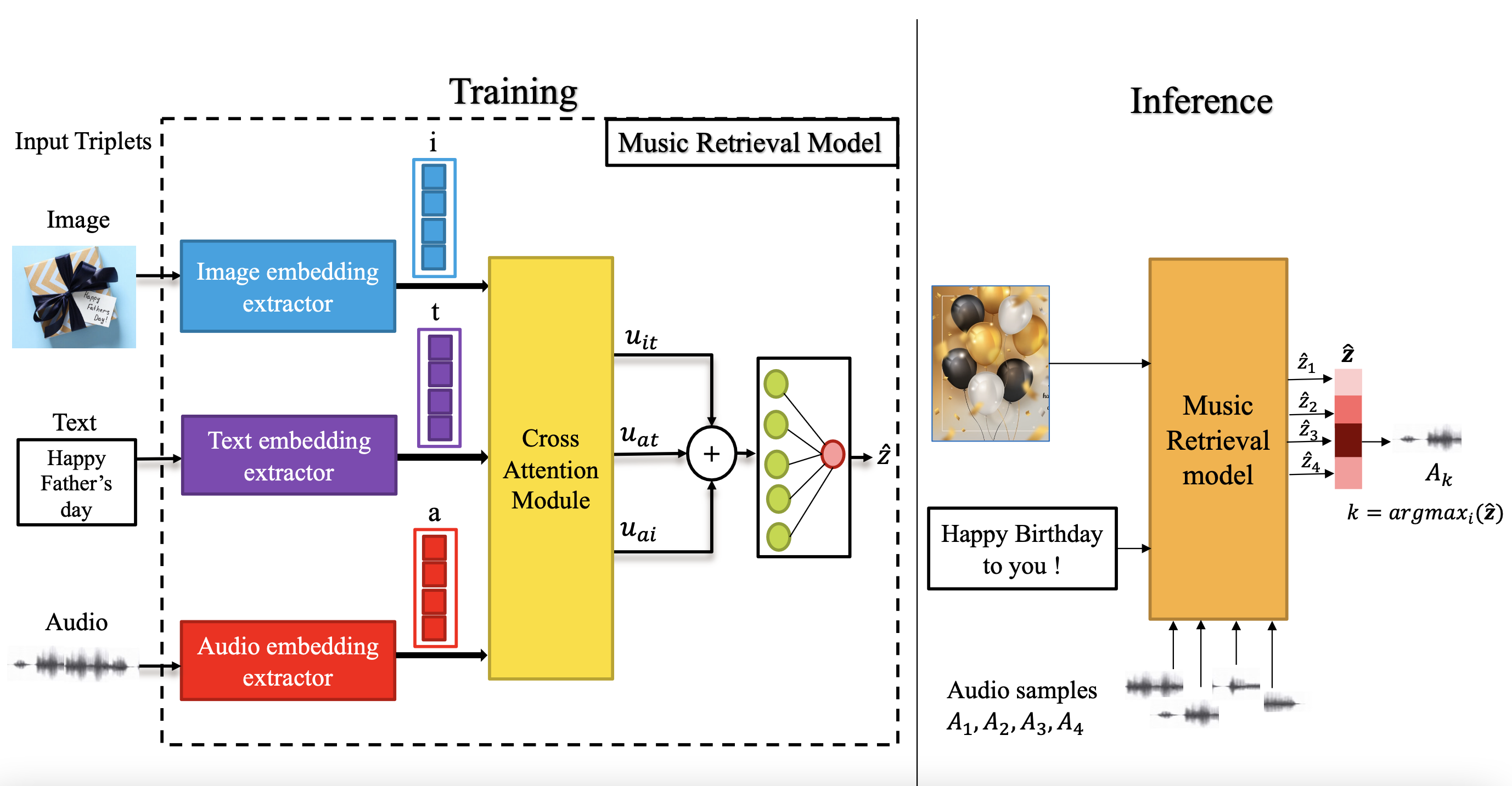

Overview Diagram

Block diagram of the proposed music retrieval model, which takes an image-text pair as input.

Given our paired image-text-audio dataset, we propose a novel multimodal cross-attention audio retrieval (MMCAR) algorithm that trains neural networks to learn a common shared feature space across the image, text, and audio modalities. Using these learned features, we demonstrate that our method outperforms existing state-of-the-art methods, and we establish a new reference benchmark on our dataset for the research community.
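To make the idea of retrieval in a shared feature space concrete, here is a minimal sketch in plain Python. It is not the MMCAR architecture: it assumes embeddings have already been produced by some trained encoders, uses a single dot-product attention step as a stand-in for cross-attention between text and image features, and ranks audio clips by cosine similarity. All function names, file names, and the toy vectors are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def l2_normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v] if norm > 0 else list(v)

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attend(query, regions):
    """Single-head dot-product attention: one query vector attends over region vectors."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, r) / scale for r in regions])
    return [sum(w * r[i] for w, r in zip(weights, regions)) for i in range(len(query))]

def fuse(text_vec, image_region_vecs):
    """Assumed fusion: text attends over image regions, residual add, then normalize."""
    attended = cross_attend(text_vec, image_region_vecs)
    return l2_normalize([t + a for t, a in zip(text_vec, attended)])

def rank_audio(query_vec, audio_bank):
    """Return audio ids sorted by cosine similarity to the query, best match first."""
    scored = [(dot(query_vec, l2_normalize(vec)), audio_id)
              for audio_id, vec in audio_bank.items()]
    return [audio_id for _, audio_id in sorted(scored, reverse=True)]

# Toy, hand-written 3-d vectors standing in for learned embeddings (illustrative only).
text_vec = [1.0, 0.0, 0.0]
image_regions = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0]]
audio_bank = {
    "upbeat.wav": [1.0, 0.2, 0.0],
    "calm.wav": [0.0, 1.0, 0.0],
    "somber.wav": [0.0, 0.0, 1.0],
}

query = fuse(text_vec, image_regions)
ranking = rank_audio(query, audio_bank)
print(ranking)
```

In a trained system the encoders would be learned so that matching design documents and audio clips land close together in this space; the ranking step itself would look much like the nearest-neighbor search above.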

Sample designed images from different mood categories

BibTeX

@article{singh2023audio,
  title={Audio Retrieval for Multimodal Design Documents: A New Dataset and Algorithms},
  author={Singh, Prachi and Karanam, Srikrishna and Shekhar, Sumit},
  journal={arXiv preprint arXiv:2302.14757},
  year={2023}
}

Acknowledgements

Special thanks to Prof. Sriram Ganapathy, LEAP lab, IISc.
